To debug Apache Airflow with Visual Studio Code, we first build a Docker image from the sources. Start by cloning the Apache Airflow GitHub repository, then open the folder in VS Code.

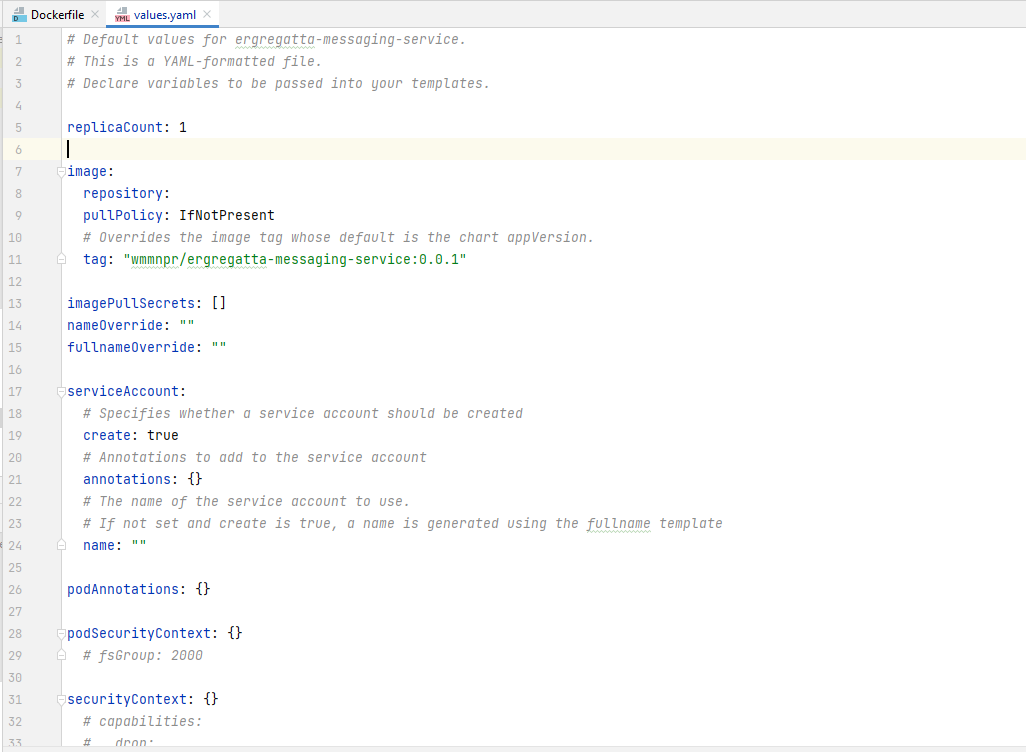

In the Dockerfile, change the following values:

    ARG AIRFLOW_INSTALLATION_METHOD="."
    ARG AIRFLOW_SOURCES_WWW_FROM="airflow/www"
    ARG AIRFLOW_SOURCES_WWW_TO="/opt/airflow/airflow/www"
    ARG AIRFLOW_SOURCES_FROM="."
    ARG AIRFLOW_SOURCES_TO="/opt/airflow"

Then build the image with the new settings and run it, overriding the entry point so that you land in a shell. Port 8080 is published for the web server and port 5678 for the debugger:

    docker build -t my-image:0.0.1 -f Dockerfile .
    docker run -p 8080:8080 -p 5678:5678 --entrypoint /bin/bash -it my-image:0.0.1

In the container, first install debugpy, for example with "python -m pip install debugpy".

We'll need the installed location of the Airflow code for our launch.json configuration file. You can find the site-packages directory by running:

    python -m pip -V

The output looks something like this:

    pip 21.3.1 from /home/airflow/.local/lib/python3.7/site-packages/pip (python 3.7)
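As an alternative to reading the path off the pip output, the package location can be asked for directly. This is only a minimal sketch: `package_dir` is a hypothetical helper name, and it assumes the package is importable by the same interpreter that runs the script. Inside the container you would pass "airflow"; the example call uses the standard-library `json` package so that it runs anywhere.

```python
import importlib.util
import os


def package_dir(name: str) -> str:
    """Return the directory a top-level package would be imported from.

    Hypothetical helper: find_spec only locates a top-level package,
    it does not import it.
    """
    spec = importlib.util.find_spec(name)
    if spec is None or not spec.submodule_search_locations:
        raise ModuleNotFoundError(f"{name!r} is not an importable package")
    # A regular package has exactly one search location: its own directory.
    return os.path.abspath(list(spec.submodule_search_locations)[0])


if __name__ == "__main__":
    # In the Airflow container this would be package_dir("airflow").
    print(package_dir("json"))
```

The printed directory is the value that would go into remoteRoot.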

Now, still inside the running container, start Airflow under debugpy. Here I'll just call --help:

    python -m debugpy --listen 0.0.0.0:5678 --wait-for-client -m airflow --help
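To make the parts of that command explicit, here is a small sketch that assembles the same argument list in Python. The function name `debugpy_command` and its parameters are made up for illustration; only the debugpy options already used above (`--listen`, `--wait-for-client`, `-m`) appear in it.

```python
import sys
from typing import List, Sequence


def debugpy_command(module: str,
                    module_args: Sequence[str] = (),
                    host: str = "0.0.0.0",
                    port: int = 5678,
                    wait: bool = True) -> List[str]:
    """Build the argument list for running a module under debugpy (hypothetical helper)."""
    # Run debugpy as a module and tell it where to listen for the IDE.
    # 0.0.0.0 makes the port reachable from outside the container.
    cmd = [sys.executable, "-m", "debugpy", "--listen", f"{host}:{port}"]
    if wait:
        # Block until VS Code attaches, so early breakpoints are not missed.
        cmd.append("--wait-for-client")
    # Everything after the second -m is the target module and its arguments.
    cmd += ["-m", module, *module_args]
    return cmd


if __name__ == "__main__":
    print(" ".join(debugpy_command("airflow", ["--help"])))
```

The resulting list could be handed to `subprocess.run` inside the container, assuming debugpy is installed there.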

In the cloned repository directory, create a launch.json file in the .vscode directory. The value for remoteRoot should be taken from the output of "python -m pip -V" above, with the trailing pip replaced by airflow.

    {
        "version": "0.2.0",
        "configurations": [
            {
                "name": "Python: Remote Attach",
                "type": "python",
                "request": "attach",
                "justMyCode": false,
                "connect": {
                    "host": "localhost",
                    "port": 5678
                },
                "pathMappings": [
                    {
                        "localRoot": "${workspaceFolder}/airflow",
                        "remoteRoot": "/home/airflow/.local/lib/python3.7/site-packages/airflow"
                    }
                ]
            }
        ]
    }
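The step from the pip output to the remoteRoot value can be sketched in a few lines of Python. This is an illustration, not part of the original setup: the function names are hypothetical, and the regular expression assumes the pip output has the same shape as the line shown earlier.

```python
import json
import posixpath
import re


def site_packages_from_pip(pip_version_line: str) -> str:
    """Extract the site-packages directory from a 'pip -V' output line."""
    match = re.search(r"\bfrom (.+?) \(python [^)]*\)\s*$", pip_version_line)
    if match is None:
        raise ValueError("unexpected 'pip -V' output: " + pip_version_line)
    # The captured path ends in .../site-packages/pip; drop the last component.
    return posixpath.dirname(match.group(1))


def build_launch_config(remote_root: str, port: int = 5678) -> dict:
    """Build the same attach configuration as the launch.json above."""
    return {
        "version": "0.2.0",
        "configurations": [{
            "name": "Python: Remote Attach",
            "type": "python",
            "request": "attach",
            "justMyCode": False,
            "connect": {"host": "localhost", "port": port},
            "pathMappings": [{
                "localRoot": "${workspaceFolder}/airflow",
                "remoteRoot": remote_root,
            }],
        }],
    }


if __name__ == "__main__":
    line = ("pip 21.3.1 from /home/airflow/.local/lib/python3.7/"
            "site-packages/pip (python 3.7)")
    remote_root = posixpath.join(site_packages_from_pip(line), "airflow")
    print(json.dumps(build_launch_config(remote_root), indent=4))
```

Using `posixpath` rather than `os.path` keeps the container paths in POSIX form even if the script runs on a Windows host.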

In VS Code, open the __main__.py file in the airflow folder of the project and set your breakpoints. Now start debugging with the configuration from launch.json:

Of course the "Here we go!!!!" is from me :>)