The task was to search my Ultra Deep WGS VCF from nebula.org for all variants with a clinical significance of pathogenic. The web tool provided by nebula, gene.io.bio, is invaluable if one already knows which genes to investigate, but it is not suited to searching across all the variants found in one's sample. In my case, the variant call file from nebula was nearly 256 MB compressed.

To perform such a search, it was necessary to enrich my nebula.org VCF with the CLNSIG information provided by ClinVar in their VCF reference file.

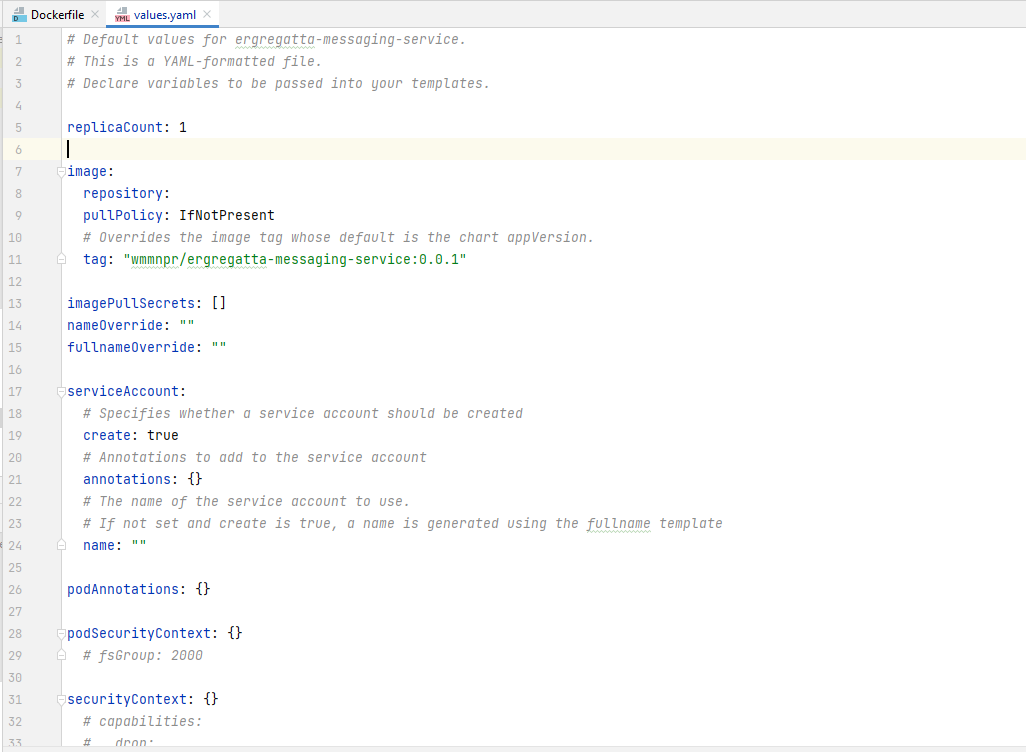

The tool that ultimately produced my desired results is called bcftools. Rather than installing it locally, I used it in a Docker container running in Docker Desktop (v4.16.1) on my MacBook Pro (Sonoma 14.5). A subdirectory of my home folder on the Mac held all the downloaded files, most of which I fetched with my browser.

#use bcftools supplied in docker image to avoid local installation

docker run -it -v $HOME/nebula_data:/data mcfonsecalab/variantutils

A small issue was that one of the join columns, CHROM, is not written the same way in the ClinVar reference file and in the VCF from nebula: the nebula VCF uses the "chr" prefix and the ClinVar file does not. The awk step below prefixes the chromosome names in the ClinVar file with "chr".

Below are the steps (don't forget that the commands must be executed from within the Docker container):

#download VCF file from nebula to localhost

MYNEBULAVCF.mm2.sortdup.bqsr.hc.vcf.gz

#index the VCF if the index file was not downloaded from nebula (tabix needs the bgzip-compressed .vcf.gz)

tabix -p vcf MYNEBULAVCF.mm2.sortdup.bqsr.hc.vcf.gz

#download ClinVar VCF reference

wget ftp://ftp.ncbi.nlm.nih.gov/pub/clinvar/vcf_GRCh38/clinvar.vcf.gz

#decompress the clinvar.vcf.gz and copy it to a new file

bgzip -d clinvar.vcf.gz

cp clinvar.vcf clinvar.vcf.txt

#add "chr" to the chromosome number (CHROM) in the decompressed ClinVar file so that it can be joined with the nebula VCF on the CHROM and POS fields

awk '{if($1 ~ /^[0-9XYM]/) print "chr"$1substr($0, length($1) + 1); else print $0}' clinvar.vcf.txt > clinvar-adjusted.vcf.txt
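To see what the awk step does before running it on the full ClinVar file, here is a minimal sketch on a made-up sample. The file names `clinvar-sample.vcf.txt` and `clinvar-sample-adjusted.vcf.txt` and the records in them are invented for illustration only.

```shell
# Build a tiny, made-up ClinVar-style sample (tab-separated; not real data).
printf '##fileformat=VCFv4.1\n' > clinvar-sample.vcf.txt
printf '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\n' >> clinvar-sample.vcf.txt
printf '1\t1000\t1\tA\tG\t.\t.\tCLNSIG=Pathogenic\n' >> clinvar-sample.vcf.txt
printf 'X\t2000\t2\tC\tT\t.\t.\tCLNSIG=Benign\n' >> clinvar-sample.vcf.txt

# Same awk logic as the step above: prefix "chr" on records, leave "#" header lines alone.
awk '{if($1 ~ /^[0-9XYM]/) print "chr" $1 substr($0, length($1) + 1); else print $0}' \
  clinvar-sample.vcf.txt > clinvar-sample-adjusted.vcf.txt

# List the distinct chromosome names in the adjusted sample.
grep -v '^#' clinvar-sample-adjusted.vcf.txt | cut -f1 | sort -u
```

Running the same `grep -v '^#' ... | cut -f1 | sort -u` check on both the adjusted ClinVar file and the nebula VCF is a quick way to confirm that the names agree. One thing worth checking there: if the ClinVar file labels the mitochondrial contig `MT`, the prefix turns it into `chrMT`, which may not match a `chrM` in the sample VCF.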

#compress the adjusted file and index it

bgzip clinvar-adjusted.vcf.txt

tabix -p vcf clinvar-adjusted.vcf.txt.gz

#annotate the nebula VCF with CLNSIG from ClinVar (uncompressed output, -O v, so that grep can read it later)

bcftools annotate -a clinvar-adjusted.vcf.txt.gz -c CHROM,POS,INFO/CLNSIG -o MYNEBULAVCF-annotated.vcf -O v MYNEBULAVCF.mm2.sortdup.bqsr.hc.vcf.gz
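Before filtering, it can help to see which CLNSIG values actually ended up in the annotated file. The following is a minimal sketch on a made-up sample; `annotated-sample.vcf` and `clnsig-counts.txt` are hypothetical names, and it assumes the annotated VCF is plain text (pipe a compressed file through `bgzip -dc` first).

```shell
# Made-up annotated sample (tab-separated; not real data).
printf '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\n' > annotated-sample.vcf
printf 'chr1\t1000\t.\tA\tG\t50\tPASS\tDP=30;CLNSIG=Pathogenic\n' >> annotated-sample.vcf
printf 'chr1\t2000\t.\tC\tT\t50\tPASS\tDP=28;CLNSIG=Benign\n' >> annotated-sample.vcf
printf 'chr2\t3000\t.\tG\tA\t50\tPASS\tDP=31;CLNSIG=Benign\n' >> annotated-sample.vcf
printf 'chr2\t4000\t.\tT\tC\t50\tPASS\tDP=29\n' >> annotated-sample.vcf

# Count how often each CLNSIG value occurs, most frequent first.
# Records without a CLNSIG tag (no ClinVar match) are simply not counted.
grep -v '^#' annotated-sample.vcf \
  | grep -o 'CLNSIG=[^;[:space:]]*' \
  | sort | uniq -c | sort -rn > clnsig-counts.txt
cat clnsig-counts.txt
```

If the counts file comes out empty on the real data, the join most likely matched nothing, and the chromosome naming is the first thing to re-check.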

#add gene name to VCF enriched file

wget ftp://ftp.ebi.ac.uk/pub/databases/gencode/Gencode_human/release_42/gencode.v42.annotation.gtf.gz

#decompress

bgzip -d gencode.v42.annotation.gtf.gz

#convert to BED file format

awk 'BEGIN{OFS="\t"} $3 == "gene" {print $1, $4-1, $5, $14}' gencode.v42.annotation.gtf > gencode_genes.bed
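The conversion above takes the gene name from a fixed field position, which depends on the order of the attributes in the GTF line and keeps the surrounding quotes and semicolon. As a hedged alternative, the sketch below looks up `gene_name` by key and strips the quotes. The sample record and the file names `sample.gtf` and `sample_genes.bed` are made up for illustration.

```shell
# One made-up GTF gene record (tab-separated columns, attributes in column 9).
printf 'chr1\tHAVANA\tgene\t101\t200\t.\t+\t.\tgene_id "ENSG0000.1"; gene_type "protein_coding"; gene_name "DEMO1"; level 2;\n' > sample.gtf

# Split on tabs, locate gene_name "..." in the attribute column, and
# print 0-based BED coordinates plus the unquoted gene name.
awk 'BEGIN{FS=OFS="\t"}
     $3 == "gene" && match($9, /gene_name "[^"]+"/) {
       name = substr($9, RSTART + 11, RLENGTH - 12)
       print $1, $4 - 1, $5, name
     }' sample.gtf > sample_genes.bed
cat sample_genes.bed
```

Note that with unquoted names the later sed pattern, which expects `GENE="..."`, would need to be adjusted, for example to capture everything after `GENE=` up to the next semicolon.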

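#sort, compress and index the BED file (bcftools expects a bgzip-compressed, tabix-indexed annotation file)

sort -k1,1 -k2,2n gencode_genes.bed | bgzip -c > gencode_genes.bed.gz

tabix -p bed gencode_genes.bed.gz

#add the gene name to the INFO field from gencode_genes.bed.gz

bcftools annotate -a gencode_genes.bed.gz -h <(echo '##INFO=<ID=GENE,Number=1,Type=String,Description="Gene name">') -c CHROM,FROM,TO,GENE -o MYNEBULAVCF-annotated_with_genes.vcf -O v MYNEBULAVCF-annotated.vcf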

#filter pathogenic variants with grep

grep Pathogenic MYNEBULAVCF-annotated_with_genes.vcf > MYNEBULAVCF-pathogenic-filtered.vcf
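The plain grep drops the VCF header and matches "Pathogenic" anywhere on the line. A slightly stricter variant, sketched here on made-up data, keeps the header lines and only looks at the CLNSIG tag in the INFO column. The file names `genes-sample.vcf` and `pathogenic-sample.vcf` and all records are hypothetical.

```shell
# Made-up annotated sample (tab-separated; not real data).
printf '##fileformat=VCFv4.2\n' > genes-sample.vcf
printf '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\n' >> genes-sample.vcf
printf 'chr1\t1000\t.\tA\tG\t50\tPASS\tCLNSIG=Pathogenic;GENE="DEMO1";\n' >> genes-sample.vcf
printf 'chr1\t2000\t.\tC\tT\t50\tPASS\tCLNSIG=Likely_pathogenic;GENE="DEMO2";\n' >> genes-sample.vcf
printf 'chr2\t3000\t.\tG\tA\t50\tPASS\tCLNSIG=Benign;GENE="DEMO3";\n' >> genes-sample.vcf

# Keep header lines, plus records whose CLNSIG value contains "Pathogenic"
# (case-sensitive, like the grep above, so Likely_pathogenic is not included).
awk -F '\t' '/^#/ || $8 ~ /(^|;)CLNSIG=[^;]*Pathogenic/' genes-sample.vcf > pathogenic-sample.vcf
cat pathogenic-sample.vcf
```

Because the header survives, the result stays a readable VCF for other tools. Loosening the pattern to `[Pp]athogenic` would also pull in likely pathogenic variants, but it would match values such as `Conflicting_interpretations_of_pathogenicity` as well, so the tally of CLNSIG values is worth a look first.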

#get just gene name

grep Patho MYNEBULAVCF-annotated_with_genes.vcf | sed -n 's/.*GENE="\([^"]*\)".*/\1/p' > risk-genes.txt

#sort, filter unique and make a comma separated list

sort -u risk-genes.txt | tr '\n' ','
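The `tr` approach leaves a trailing comma at the end of the list. A small alternative, sketched with a made-up input file `risk-genes-sample.txt` (the three names are borrowed from the list below purely as sample input), uses `paste` to join the lines instead.

```shell
# Sample input with a duplicate entry (for illustration only).
printf 'SBDS\nABCA4\nSBDS\nPERM1\n' > risk-genes-sample.txt

# Sort, de-duplicate, and join with commas; paste adds no trailing comma.
gene_list=$(sort -u risk-genes-sample.txt | paste -sd, -)
echo "$gene_list"
# prints: ABCA4,PERM1,SBDS
```

The resulting string can be pasted directly into a gene search field.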

#gene list can be used in the gene.io.bio website

PERM1, ABCA4, EHBP1, WNT10A, AIMP1, ENPP1, SBDS, PRSS1, ADRA2A, ASXL3

Finally, take the list and use it in gene.io.bio as shown below: